What Do You Call Each 1 Or 0 Used In The Representation Of Computer Data?

- 7.4.1 Bits, bytes, and words

- 7.4.2 Binary, Octal, and Hexadecimal

- 7.4.3 Numbers

- 7.4.3.1 Integers

- 7.4.3.2 Real numbers

- 7.4.4 Case study: Network traffic

- 7.4.5 Text

- 7.4.6 Data with units or labels

- 7.4.6.1 Dates

- 7.4.6.2 Money

- 7.4.7 Binary values

- 7.4.8 Memory for processing versus memory for storage

7.4 Computer Memory

Given that we are always going to store our data on a computer, it makes sense for us to find out a little bit about how that data is stored. How does a computer store the letter `A' on a hard drive? What about a numeric value?

It is useful to know how to store information on a computer because this will allow us to reason about the amount of space that will be required to store a data set, which in turn will allow us to determine what software or hardware we will need to be able to work with a data set, and to decide upon an appropriate storage format. In order to access a data set correctly, it can also be useful to know how data has been stored; for example, there are many ways that a simple number could be stored. We will also look at some important limitations on how well information can be stored on a computer.

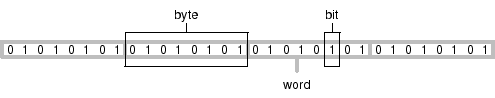

7.4.1 Bits, bytes, and words

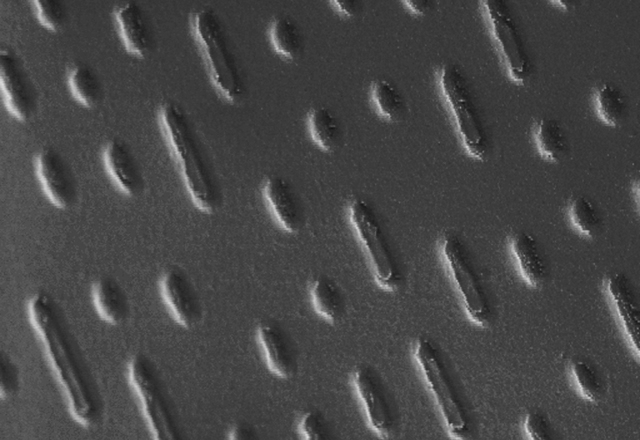

The surface of a CD magnified many times to show the pits in the surface that encode information.

The most fundamental unit of computer memory is the bit. A bit can be a tiny magnetic region on a hard disk, a tiny dent in the reflective material on a CD or DVD, or a tiny transistor on a memory stick. Whatever the physical implementation, the important thing to know about a bit is that, like a switch, it can only take one of two values: it is either "on" or "off".

A collection of 8 bits is called a byte and (on the majority of computers today) a collection of 4 bytes, or 32 bits, is called a word. Each individual data value in a data set is usually stored using one or more bytes of memory, but at the lowest level, any data stored on a computer is just a large collection of bits. For example, the first 256 bits (32 bytes) of the electronic format of this book are shown below. At the lowest level, a data set is just a series of zeroes and ones like this.

00100101 01010000 01000100 01000110 00101101 00110001 00101110 00110100 00001010 00110101 00100000 00110000 00100000 01101111 01100010 01101010 00001010 00111100 00111100 00100000 00101111 01010011 00100000 00101111 01000111 01101111 01010100 01101111 00100000 00101111 01000100 00100000
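As a rough illustration of how such a run of bits maps back to something readable, the Python sketch below groups the bits shown above into bytes and interprets each byte as an ASCII character. The variable names are chosen for this example only.

```python
# The 32 bytes shown above, written as groups of eight binary digits.
bits = (
    "00100101 01010000 01000100 01000110 00101101 00110001 00101110 00110100 "
    "00001010 00110101 00100000 00110000 00100000 01101111 01100010 01101010 "
    "00001010 00111100 00111100 00100000 00101111 01010011 00100000 00101111 "
    "01000111 01101111 01010100 01101111 00100000 00101111 01000100 00100000"
)

# Convert each group of eight digits to an integer between 0 and 255.
values = [int(group, 2) for group in bits.split()]
raw = bytes(values)

# Interpret the bytes as ASCII text.
text = raw.decode("ascii")
print(len(raw), repr(text))
```

Decoded this way, the bytes begin with the characters `%PDF`, which is consistent with the bits having come from a PDF file.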

The number of bytes and words used for an individual data value will vary depending on the storage format, the operating system, and even the computer hardware, but in many cases, a single letter or character of text takes up one byte and an integer, or whole number, takes up one word. A real or decimal number takes up one or two words depending on how it is stored.

For example, the text "hello" would take up 5 bytes of storage, one per character. The text "12345" would also require 5 bytes. The integer 12,345 would take up 4 bytes (1 word), as would the integers 1 and 12,345,678. The real number 123.45 would take up 4 or 8 bytes, as would the values 0.00012345 and 12345000.0.
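These sizes can be checked with a small Python sketch. It assumes Python's standard `struct` module as a stand-in for "how a value is laid out in memory"; the format codes `<i`, `<f`, and `<d` request a 4-byte integer, a 4-byte real, and an 8-byte real respectively.

```python
import struct

# Text: one byte per character in ASCII.
text_hello = "hello".encode("ascii")
text_digits = "12345".encode("ascii")  # digits stored as text, not as a number

# Integers: one 4-byte word each, whatever their magnitude.
int_small = struct.pack("<i", 1)
int_medium = struct.pack("<i", 12345)
int_large = struct.pack("<i", 12345678)

# Reals: one word (single precision) or two words (double precision).
real_single = struct.pack("<f", 123.45)
real_double = struct.pack("<d", 123.45)

for name, data in [
    ("hello", text_hello), ("'12345'", text_digits),
    ("1", int_small), ("12345", int_medium), ("12345678", int_large),
    ("123.45 (single)", real_single), ("123.45 (double)", real_double),
]:
    print(f"{name}: {len(data)} bytes")
```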

7.4.2 Binary, Octal, and Hexadecimal

A piece of computer memory can be represented by a series of 0's and 1's, with one digit for each bit of memory; the value 1 represents an "on" bit and a 0 represents an "off" bit. This notation is described as binary form. For example, below is a single byte of memory that contains the letter `A' (ASCII code 65; binary 1000001).

01000001

A single word of memory contains 32 bits, so it requires 32 digits to represent a word in binary form. A more convenient notation is octal, where each digit represents a value from 0 to 7. Each octal digit is the equivalent of three binary digits, so a byte of memory can be represented by 3 octal digits.

Binary values are pretty easy to spot, but octal values are much harder to distinguish from normal decimal values, so when writing octal values, it is common to precede the digits by a special character, such as a leading `0'.

As an example of octal form, the binary code for the character `A' splits into triplets of binary digits (from the right) like this: 01 000 001. So the octal digits are 101, commonly written 0101 to emphasize the fact that these are octal digits.

An even more efficient way to represent memory is hexadecimal form. Here, each digit represents a value between 0 and 15, with values greater than 9 replaced with the characters a to f. A single hexadecimal digit corresponds to 4 bits, so each byte of memory requires only 2 hexadecimal digits. As with octal, it is common to precede hexadecimal digits with a special character, e.g., 0x or #. The binary form for the character `A' splits into two quadruplets: 0100 0001. The hexadecimal digits are 41, commonly written 0x41 or #41.
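The three notations can be compared with a short Python sketch. Note that Python itself writes octal literals with a `0o` prefix rather than a bare leading `0`; that is a convention of the language, not of the text above.

```python
code = ord("A")  # the integer code for the letter 'A'

binary = format(code, "08b")     # eight binary digits, zero padded
octal = format(code, "o")        # octal digits
hexadecimal = format(code, "x")  # hexadecimal digits

print(code, binary, octal, hexadecimal)

# The same value written as Python literals in each base.
same_value = (0b01000001 == 0o101 == 0x41 == 65)
```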

Another standard practice is to write hexadecimal representations as pairs of digits, corresponding to a single byte, separated by spaces. For example, the memory storage for the text "just testing" (12 bytes) could be represented as follows:

6a 75 73 74 20 74 65 73 74 69 6e 67

When displaying a block of computer memory, another standard practice is to present three columns of information: the left column presents an offset, a number indicating which byte is shown first on the row; the centre column shows the actual memory contents, typically in hexadecimal form; and the right column shows an interpretation of the memory contents (either characters, or numeric values). For example, the text "just testing" is shown below complete with offset and character display columns.

0 : 6a 75 73 74 20 74 65 73 74 69 6e 67 | just testing

We will use this format for displaying raw blocks of memory throughout this section.
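A display of this kind is straightforward to produce. The following is a minimal sketch in Python; the function name `hex_dump` and the choice of 12 bytes per row are assumptions made for this example, and bytes that are not printable ASCII are shown as a dot.

```python
def hex_dump(data, width=12):
    """Return one line per `width` bytes: offset : hex bytes | characters."""
    lines = []
    for offset in range(0, len(data), width):
        chunk = data[offset:offset + width]
        hex_part = " ".join(f"{byte:02x}" for byte in chunk)
        # Show printable ASCII characters; use "." for everything else.
        char_part = "".join(chr(b) if 32 <= b < 127 else "." for b in chunk)
        lines.append(f"{offset} : {hex_part} | {char_part}")
    return lines


for line in hex_dump("just testing".encode("ascii")):
    print(line)
```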

7.4.3 Numbers

Recall that the most basic unit of memory, the bit, has two possible states, "on" or "off". If we used one bit to store a number, we could use each different state to represent a different number. For example, a bit could be used to represent the numbers 0, when the bit is off, and 1, when the bit is on.

We will need to store numbers much larger than 1; to do that we need more bits.

If we use two bits together to store a number, each bit has two possible states, so there are four possible combined states: both bits off, first bit off and second bit on, first bit on and second bit off, or both bits on. Again using each state to represent a different number, we could store four numbers using two bits: 0, 1, 2, and 3.

The settings for a series of bits are typically written using a 0 for off and a 1 for on. For example, the four possible states for two bits are 00, 01, 10, 11. This representation is called binary notation.

In general, if we use $k$ bits, each bit has two possible states, and the bits combined can represent $2^k$ possible states, so with $k$ bits, we could represent the numbers 0, 1, 2 up to $2^k - 1$.
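The counting argument can be made concrete by listing every state of a small number of bits. This Python sketch uses a hypothetical helper, `bit_states`, written for this example.

```python
from itertools import product


def bit_states(k):
    """List every on/off combination of k bits, written as 0s and 1s."""
    return ["".join(bits) for bits in product("01", repeat=k)]


two_bits = bit_states(2)
numbers = [int(state, 2) for state in two_bits]  # the number each state stands for
print(two_bits, numbers)

# With k bits there are 2**k states, so the largest number is 2**k - 1.
k = 8
state_count = len(bit_states(k))
largest = 2**k - 1
print(state_count, largest)
```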

7.4.3.1 Integers

Integers are commonly stored using a word of memory, which is 4 bytes or 32 bits, so integers from 0 up to 4,294,967,295 ($2^{32} - 1$) can be stored. Below are the integers 1 to 5 stored as 4-byte values (each row represents one integer).

0 : 00000001 00000000 00000000 00000000 | 1
4 : 00000010 00000000 00000000 00000000 | 2
8 : 00000011 00000000 00000000 00000000 | 3
12 : 00000100 00000000 00000000 00000000 | 4
16 : 00000101 00000000 00000000 00000000 | 5

This may look a little strange; within each byte (each block of eight bits), the bits are written from right to left like we are used to in normal decimal notation, but the bytes themselves are written left to right! It turns out that the computer does not mind which order the bytes are used (as long as we tell the computer what the order is) and most software uses this left to right order for bytes.
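The two byte orders can be compared directly. In this Python sketch, the `struct` format `<I` asks for a 4-byte unsigned integer with the least significant byte first (the order used in the rows above) and `>I` asks for the reverse; `byte_rows` is a helper name invented for the example.

```python
import struct


def byte_rows(value, fmt):
    """Pack an integer with the given struct format and show each byte in binary."""
    return " ".join(format(byte, "08b") for byte in struct.pack(fmt, value))


little = byte_rows(1, "<I")  # least significant byte first
big = byte_rows(1, ">I")     # most significant byte first

print(little)
print(big)
```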

Two problems should immediately be apparent: this does not allow for negative values, and very large integers, $2^{32}$ or greater, cannot be stored in a word of memory.

In practice, the first problem is solved by sacrificing one bit to indicate whether the number is positive or negative, so the range becomes -2,147,483,647 to 2,147,483,647 ($\pm(2^{31} - 1)$).

The second problem, that we cannot store very large integers, is an inherent limit to storing data on a computer (in finite memory) and is worth remembering when working with very large values. Solutions include: using more memory to store integers, e.g., two words per integer, which uses up more memory, so is less memory-efficient; storing integers as real numbers, which can introduce inaccuracies (see below); or using arbitrary precision arithmetic, which uses as much memory per integer as is needed, but makes calculations with the values slower.

Depending on the computer language, it may also be possible to specify that only positive (unsigned) integers are required (i.e., reclaim the sign bit), in order to gain a greater upper limit. Conversely, if only very small integer values are needed, it may be possible to use a smaller number of bytes or even to work with only a couple of bits (less than a byte).
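These limits can be probed with a short Python sketch. It assumes the `struct` module's fixed-size formats as a model of word-sized storage (`<i` signed 4 bytes, `<I` unsigned 4 bytes, `<q` signed 8 bytes, `<B` unsigned 1 byte); the helper `fits` is made up for the example. Python's own integers are arbitrary precision, so they illustrate the last of the solutions listed above.

```python
import struct


def fits(fmt, value):
    """Report whether an integer can be stored in the given fixed-size format."""
    try:
        struct.pack(fmt, value)
        return True
    except struct.error:
        return False


print(fits("<i", 2**31 - 1), fits("<i", 2**31))  # signed word: upper limit
print(fits("<I", 2**32 - 1), fits("<I", 2**32))  # unsigned word: upper limit
print(fits("<q", 2**32))                         # two words are enough
print(fits("<B", 255), fits("<B", 256))          # a single byte

# Arbitrary precision: Python grows the storage as needed.
huge = 2**100
print(huge)
```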

7.4.3.2 Real numbers

Real numbers (and rationals) are much harder to store digitally than integers.

Recall that $k$ bits can represent $2^k$ different states. For integers, the first state can represent 0, the second state can represent 1, the third state can represent 2, and so on. We can only go as high as the integer $2^k - 1$, but at least we know that we can account for all of the integers up to that point.

Unfortunately, we cannot do the same thing for reals. We could say that the first state represents 0, but what does the second state represent? 0.1? 0.01? 0.00000001? Suppose we chose 0.01, so the first state represents 0, the second state represents 0.01, the third state represents 0.02, and so on. We can now only go as high as $0.01 \times (2^k - 1)$, and we have missed all of the numbers between 0.01 and 0.02 (and all of the numbers between 0.02 and 0.03, and infinitely many others).

This is another important limitation of storing information on a computer: there is a limit to the precision that we can achieve when we store real numbers. Most real values cannot be stored exactly on a computer. Examples of this problem include not only exotic values such as transcendental numbers (e.g., $\pi$ and $e$), but also very simple everyday values such as $\frac{1}{3}$ or even 0.1. This is not as dreadful as it sounds, because even if the exact value cannot be stored, a value very very close to the true value can be stored. For example, if we use eight bytes to store a real number then we can store the distance of the earth from the sun to the nearest millimetre. So for practical purposes this is usually not an issue.
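The case of 0.1 is easy to examine in Python, whose `float` type is an 8-byte real. The sketch below uses the standard `decimal` and `fractions` modules to reveal the value that is actually stored; the variable names are illustrative only.

```python
from decimal import Decimal
from fractions import Fraction

# The exact value of the 8-byte real that is closest to 0.1.
stored = Decimal(0.1)
print(stored)

# Compare the stored value with the true fraction 1/10.
exact_tenth = Fraction(1, 10)
is_exact = Fraction(0.1) == exact_tenth
error = abs(Fraction(0.1) - exact_tenth)
print(is_exact, float(error))

# A familiar consequence: tiny errors show up in simple arithmetic.
sum_matches = (0.1 + 0.2 == 0.3)
print(sum_matches)
```

The stored value is not exactly 0.1, but the error is smaller than one part in $10^{17}$, which is the sense in which a value "very very close" to the true value is stored.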

The limitation on numerical accuracy rarely has an effect on stored values because it is very hard to obtain a scientific measurement with this level of precision. However, when performing many calculations, even tiny errors in stored values can accumulate and result in significant problems. We will revisit this issue in Chapter 11. Solutions to storing real values with full precision include: using even more memory per value, especially in working, (e.g., 80 bits instead of 64) and using arbitrary-precision arithmetic.

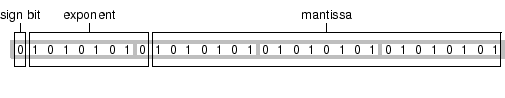

A real number is stored as a floating-point number, which means that it is stored as two values: a mantissa, $m$, and an exponent, $e$, in the form $m \times 2^e$. When a single word is used to store a real number, a typical arrangement uses 8 bits for the exponent and 23 bits for the mantissa (plus 1 bit to indicate the sign of the number).

The exponent mostly dictates the range of possible values. Eight bits allows for a range of integers from -127 to 127, which means that it is possible to store numbers as small as $10^{-39}$ ($2^{-127}$) and as large as $10^{38}$ ($2^{127}$).

The mantissa dictates the precision with which values can be represented. The issue here is not the magnitude of a value (whether it is very large or very small), but the amount of precision that can be represented. With 23 bits, it is possible to represent $2^{23}$ different real values, which is a lot of values, but still leaves a lot of gaps. For example, if we are dealing with values in the range 0 to 1, we can take steps of $1/2^{23} \approx 0.0000001$, which means that we cannot represent any of the values between 0.0000001 and 0.0000002. In other words, we cannot distinguish between numbers that differ from each other by less than 0.0000001. If we deal with values in the range 0 to 10,000,000, we can only take steps of $10{,}000{,}000/2^{23} \approx 1$, so we cannot distinguish between values that differ from each other by less than 1.
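The size of these steps can be computed directly, and the loss of precision can be observed by pushing a value through 4-byte storage and back. This Python sketch assumes `struct`'s `<f` format as the single-word real; `to_float32` is a helper invented for the example.

```python
import struct

# Step sizes implied by a 23-bit mantissa.
step_unit = 1 / 2**23            # for values in the range 0 to 1
step_large = 10_000_000 / 2**23  # for values in the range 0 to 10,000,000
print(step_unit, step_large)


def to_float32(x):
    """Round-trip a value through 4-byte storage."""
    return struct.unpack("<f", struct.pack("<f", x))[0]


# Near ten million, a difference of 0.25 is lost when stored in one word.
rounded = to_float32(10_000_000.25)
print(rounded)
```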

Below are the real values 1.0 to 5.0 stored as 4-byte values (each row represents one real value). Recall that the bytes are ordered from left to right so the most important byte (containing the sign bit and most of the exponent) is the one on the right. The first bit of the byte second from the right is the last bit of the exponent.

0 : 00000000 00000000 10000000 00111111 | 1
4 : 00000000 00000000 00000000 01000000 | 2
8 : 00000000 00000000 01000000 01000000 | 3
12 : 00000000 00000000 10000000 01000000 | 4
16 : 00000000 00000000 10100000 01000000 | 5

For example, the exponent for the first value is 0111111 1, which is 127. These exponents are "biased" by 127 so to get the final exponent we subtract 127 to get 0. The mantissa has an implicit value of 1 plus, for bit $i$, the value $2^{-i}$. In this case, the entire mantissa is zero, so the mantissa is just the (implicit) value 1. The final value is $2^0 \times 1 = 1$.

For the last value, the exponent is 1000000 1, which is 129, less 127 is 2. The mantissa is 01 followed by 21 zeroes, which represents a value of (implicit) $1 + 2^{-2} = 1.25$. The final value is $2^2 \times 1.25 = 5$.
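The decoding steps just described can be written out as a short Python sketch. It assumes the 1 sign bit, 8 exponent bits, 23 mantissa bits layout described in the text, handles only ordinary (normalised) values, and uses a function name, `decode_float32`, invented for this example.

```python
import struct


def decode_float32(value):
    """Split a 4-byte real into sign, unbiased exponent, and mantissa."""
    # Reinterpret the 4 bytes of the real as a 32-bit unsigned integer.
    (bits,) = struct.unpack(">I", struct.pack(">f", value))
    sign = bits >> 31                      # 1 bit
    biased_exponent = (bits >> 23) & 0xFF  # next 8 bits
    fraction_bits = bits & 0x7FFFFF        # last 23 bits
    exponent = biased_exponent - 127       # remove the bias
    mantissa = 1 + fraction_bits / 2**23   # add the implicit leading 1
    return sign, exponent, mantissa


for x in (1.0, 5.0):
    sign, exponent, mantissa = decode_float32(x)
    print(x, sign, exponent, mantissa, (-1) ** sign * mantissa * 2**exponent)
```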

When real numbers are stored using two words instead of one, the range of possible values and the precision of stored values increases enormously, but there are still limits.

7.4.4 Case study: Network traffic

The central IT department of the University of Auckland has been collecting network traffic data since 1970. Measurements were made on each packet of information that passed through a certain location on the network. These measurements included the time at which the packet reached the network location and the size of the packet.

The time measurements are the time elapsed, in seconds, since January 1st 1970 and the measurements are extremely accurate, being recorded to the nearest 100,000th of a second. Over time, this has resulted in numbers that are both very large (there are 31,536,000 seconds in a year) and very precise. Figure 7.2 shows several lines of the data stored as plain text.

1156748010.47817 60
1156748010.47865 1254
1156748010.47878 1514
1156748010.4789 1494
1156748010.47892 114
1156748010.47891 1514
1156748010.47903 1394
1156748010.47903 1514
1156748010.47905 60
1156748010.47929 60
...

By the middle of 2007, the measurements were approaching the limits of precision for floating point values.

The data were analysed in a system that used 8 bytes per floating point number (i.e., 64-bit floating-point values). The IEEE standard for 64-bit or "double-precision" floating-point values uses 52 bits for the mantissa. This allows for approximately $2^{52}$ different real values. In the range 0 to 1, this allows for values that differ by as little as $1/2^{52} \approx 2 \times 10^{-16}$, but when the numbers are very large, for example on the order of 1,000,000,000, it is only possible to store values that differ by $1{,}000{,}000{,}000/2^{52} \approx 2 \times 10^{-7}$. In other words, double-precision floating-point values can be stored with up to only 16 significant digits.

The time measurements for the network packets differ by as little as 0.00001 seconds. Put another way, the measurements have 15 significant digits, which means that it is possible to store them with full precision as 64-bit floating-point values, but only just.

Furthermore, with values so close to the limits of precision, arithmetic performed on these values can become inaccurate. This story is taken up again in Section 11.5.14.
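How close these timestamps come to the limit can be checked in Python, whose `float` is a 64-bit floating-point value. This sketch assumes Python 3.9 or later for `math.ulp`, which returns the gap between a value and the next representable one; the variable names are illustrative.

```python
import math
import sys

timestamp = float("1156748010.47817")  # first time value from the data above

# Gap between this value and the next representable 64-bit value.
gap = math.ulp(timestamp)
print(gap)

# Number of decimal digits that a 64-bit value is guaranteed to preserve.
digits = sys.float_info.dig
print(digits)

# The 15-digit measurement survives storage and printing unchanged.
round_trips = repr(timestamp) == "1156748010.47817"
print(round_trips)

# Subtracting two nearby timestamps exposes the limited precision.
difference = float("1156748010.47865") - timestamp
print(difference)
```

The gap of roughly 0.0000002 seconds is smaller than the 0.00001 second resolution of the measurements, but not by a wide margin, which is why the difference between two timestamps is only approximately 0.00048.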

7.4.5 Text

Text is stored on a computer by first converting each character to an integer and then storing the integer. For example, to store the letter `A', we will actually store the number 65; `B' is 66, `C' is 67, and so on.

A letter is usually stored using a single byte (8 bits). Each letter is assigned an integer number and that number is stored. For example, the letter `A' is the number 65, which looks like this in binary format: 01000001. The text "hello" (104, 101, 108, 108, 111) would look like this: 01101000 01100101 01101100 01101100 01101111
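The conversion from characters to integers to bits can be reproduced in a few lines of Python, using the built-in `ord` and `chr` functions; the variable names are chosen for this example.

```python
text = "hello"

# Each character is converted to its integer code ...
codes = [ord(character) for character in text]

# ... and each integer is stored as eight bits.
binary = " ".join(format(code, "08b") for code in codes)

print(codes)
print(binary)

# The conversion also works in the other direction.
letter = chr(65)
print(letter)
```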

The conversion of letters to numbers is called an encoding. The encoding used in the examples above is called ASCII and is great for storing (American) English text. Other languages require other encodings in order to allow non-English characters, such as `ö'.

ASCII only uses 7 of the 8 bits in a byte, so a number of other encodings are just extensions of ASCII where any number of the form 0xxxxxxx matches the ASCII encoding and the numbers of the form 1xxxxxxx specify different characters for a specific set of languages. Some common encodings of this form are the ISO 8859 family of encodings, such as ISO-8859-1 or Latin-1 for West European languages, and ISO-8859-2 or Latin-2 for East European languages.

Even using all 8 bits of a byte, it is only possible to encode 256 ($2^8$) different characters. Several Asian and middle-Eastern countries have written languages that use several thousand different characters (e.g., Japanese Kanji ideographs). In order to store text in these languages, it is necessary to use a multi-byte encoding scheme where more than one byte is used to store each character.

UNICODE is an attempt to provide an encoding for all of the characters in all of the languages of the World. Every character has its own number, often written in the form U+xxxxxx. For example, the letter `A' is U+000041 and the letter `ö' is U+0000F6. UNICODE encodes for many thousands of characters, so requires more than one byte to store each character. On Windows, UNICODE text will typically use two bytes per character; on Linux, the number of bytes will vary depending on which characters are stored (if the text is only ASCII it will only take one byte per character).

For example, the text "just testing" is shown below saved via Microsoft's Notepad in three different encodings: ASCII, UNICODE, and UTF-8.

0 : 6a 75 73 74 20 74 65 73 74 69 6e 67 | just testing

The ASCII format contains exactly one byte per character. The fourth byte is binary code for the decimal value 116, which is the ASCII code for the letter `t'. We can see this byte pattern several more times, wherever there is a `t' in the text.

0 : ff fe 6a 00 75 00 73 00 74 00 20 00 | ..j.u.s.t. .
12 : 74 00 65 00 73 00 74 00 69 00 6e 00 | t.e.s.t.i.n.
24 : 67 00 | g.

The UNICODE format differs from the ASCII format in two ways. For every byte in the ASCII file, there are now two bytes, one containing the binary code we saw before followed by a byte containing all zeroes. There are also two additional bytes at the start. These are called a byte order mark (BOM) and indicate the order (endianness) of the two bytes that make up each letter in the text.

0 : ef bb bf 6a 75 73 74 20 74 65 73 74 | ...just test
12 : 69 6e 67 | ing

The UTF-8 format is mostly the same as the ASCII format; each letter has only one byte, with the same binary code as before because these are all common English letters. The difference is that there are three bytes at the start to act as a BOM.
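The three byte sequences can be approximated in Python. This is a sketch rather than a reproduction of what Notepad does: it assumes that the "UNICODE" option corresponds to little-endian UTF-16 with a BOM, and it uses Python's `utf-8-sig` codec to obtain UTF-8 with a leading BOM. The helper `hex_bytes` is invented for the example.

```python
import codecs

text = "just testing"


def hex_bytes(data):
    """Show bytes as space-separated pairs of hexadecimal digits."""
    return " ".join(f"{byte:02x}" for byte in data)


ascii_bytes = text.encode("ascii")
# Assumed equivalent of the "UNICODE" option: BOM, then two bytes per character.
utf16_bytes = codecs.BOM_UTF16_LE + text.encode("utf-16-le")
# UTF-8 preceded by a three-byte BOM.
utf8_bytes = text.encode("utf-8-sig")

for name, data in [("ASCII", ascii_bytes), ("UTF-16", utf16_bytes), ("UTF-8", utf8_bytes)]:
    print(f"{name}: {len(data)} bytes: {hex_bytes(data)}")

# A non-ASCII character needs one byte in Latin-1 but two bytes in UTF-8.
o_umlaut = "\u00f6"
print(hex_bytes(o_umlaut.encode("latin-1")), hex_bytes(o_umlaut.encode("utf-8")))
```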

7.4.6 Data with units or labels

When storing values with a known range, it can be useful to take advantage of that knowledge. For example, suppose we want to store information on gender. There are (usually) only two possible values: male and female. One way to store this information would be as text: "male" and "female". However, that approach would take up at least 4 to 6 bytes per observation. We could do better by storing the data as an integer, with 1 representing male and 2 representing female, thereby only using as little as one byte per observation. We could do even better by using just a single bit per observation, with "on" representing male and "off" representing female.

On the other hand, storing "male" is much less likely to lead to confusion than storing 1 or by setting a bit to "on"; it is much easier to remember or intuit that "male" corresponds to male. This leads us to an ideal solution where only a number is stored, but the encoding relating "male" to 1 is also stored.
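One way to picture that "ideal solution" is a table of labels stored once alongside a compact sequence of codes. The following Python sketch is only an illustration of the idea; the data values and variable names are made up for the example.

```python
# The encoding is stored once ...
labels = {1: "male", 2: "female"}
codes_by_label = {label: code for code, label in labels.items()}

# ... and each observation is stored as a one-byte code.
observations = ["male", "female", "female", "male"]
codes = bytes(codes_by_label[value] for value in observations)

text_size = sum(len(value) for value in observations)  # bytes needed as text
code_size = len(codes)                                 # bytes needed as codes
print(text_size, code_size)

# The labels can be recovered whenever they are needed.
decoded = [labels[code] for code in codes]
print(decoded)
```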

7.4.6.1 Dates

Dates are commonly stored as either text, such as February 1 2006, or as a number, for example, the number of days since 1970. A number of complications arise due to a variety of factors:

- language and cultural

- One problem with storing dates as text is that the format can differ between different countries. For example, the second month of the year is called February in English-speaking countries, but something else in other countries. A more subtle and dangerous problem arises when dates are written in formats like this: 01/03/06. In some countries, that is the first of March 2006, but in other countries it is the third of January 2006.

- time zones

- Dates (a particular day) are usually distinguished from datetimes, which specify not only a particular day, but also the hour, second, and even fractions of a second within that day. Datetimes are more complicated to work with because they depend on location; mid-day on the first of March 2006 happens at different times for different countries (in different time zones). Daylight saving just makes things worse.

- changing calendars

- The current international standard for expressing the date is the Gregorian Calendar. Issues can arise because events may be recorded using a different calendar (e.g., the Islamic calendar or the Chinese calendar) or events may have occurred prior to the existence of the Gregorian (pre sixteenth century).
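Two of the points above, the ambiguity of 01/03/06 and the storage of a date as a number of days since 1970, can be illustrated with Python's standard `datetime` module. The variable names are chosen for this sketch.

```python
from datetime import date, datetime, timedelta

text = "01/03/06"

# The same text read with two different conventions gives two different dates.
day_first = datetime.strptime(text, "%d/%m/%y").date()
month_first = datetime.strptime(text, "%m/%d/%y").date()
print(day_first, month_first)

# A date stored as a number: days since the first of January 1970.
epoch = date(1970, 1, 1)
days_since_epoch = (date(2006, 2, 1) - epoch).days
print(days_since_epoch)

# The number can be converted back to a date.
restored = epoch + timedelta(days=days_since_epoch)
print(restored)
```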

7.4.6.2 Money

There are two major issues with storing monetary values. The first is that the currency should be recorded; NZ$1.00 is very different from US$1.00. This issue applies of course to any value with a unit, such as temperature, weight, distances, etc.

The second issue with storing monetary values is that values need to be recorded exactly. Typically, we want to keep values to exactly two decimal places at all times. This is sometimes solved by using fixed-point representations of numbers rather than floating-point; the problems of lack of precision do not disappear, but they become predictable so that they can be dealt with in a rational fashion (e.g., rounding schemes).
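The difference between the two approaches can be seen in a small Python sketch. It uses the standard `decimal` module, which is a decimal arithmetic type rather than a true fixed-point type, as a stand-in for the fixed-point idea; storing whole cents as integers is shown as a second option. The currency code and amounts are invented for the example.

```python
from decimal import Decimal, ROUND_HALF_UP

# Floating-point: ten payments of 0.10 do not add up to exactly 1.00.
float_total = sum([0.10] * 10)
print(float_total)

# Decimal values written as text are stored exactly.
decimal_total = sum([Decimal("0.10")] * 10)
print(decimal_total)

# Whole cents as integers are also exact.
cents_total = sum([10] * 10)
print(cents_total)

# Rounding to two decimal places follows an explicit, predictable scheme.
currency, amount = "NZD", Decimal("2.675")  # the unit is stored with the value
rounded = amount.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)
print(currency, rounded)
```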

7.4.7 Binary values

In the standard examples we have seen so far (text and numbers), a single letter or number has been stored in one or more bytes. These are good general solutions; for example, if we want to store a number, but we do not know how large or small the number is going to be, then the standard storage methods will allow us to store pretty much whatever number turns up. Another way to put it is that if we use standard storage formats then we do not have to think too hard.

It is also true that computers are designed and optimised, right down to the hardware, for these standard formats, so it usually makes sense to stick with the mainstream solution. In other words, if we use standard storage formats then we do not have to work too hard.

All values stored electronically can be described as binary values because everything is ultimately stored using one or more bits; the value can be written as a series of 0's and 1's. However, we will distinguish between the very standard storage formats that we have seen so far, and less common formats which make use of a computer byte in more unusual ways, or even use only fractional parts of a byte.

An example of a binary format is a common solution that is used for storing colour information. Colours are often specified as a triplet of red, green, and blue intensities. For example, the colour (bright) "red" is as much red as possible, no green, and no blue. We could represent the amount of each colour as a number, say, from 0 to 1, which would mean that a single colour value would require at least 3 words (12 bytes) of storage.

A much more efficient way to store a colour value is to use only a single byte for each intensity. This allows 256 ($2^8$) different levels of red, 256 levels of blue, and 256 levels of green, for an overall total of more than 16 million different colour specifications. Given the limitations on the human visual system's ability to distinguish between colours, this is more than enough different colours. Rather than using 3 bytes per colour, often an entire word (4 bytes) is used, with the extra byte available to encode a level of translucency for the colour. So the colour "red" (as much red as possible, no green and no blue) could be represented like this:

00 ff 00 00

or

00000000 11111111 00000000 00000000
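A colour stored in this way can be built up byte by byte. The Python sketch below assumes, based only on the example above, that the bytes are ordered as translucency, red, green, blue; the function name `pack_colour` is invented for the illustration.

```python
def pack_colour(red, green, blue, translucency=0):
    """Store a colour in one 4-byte word, one byte per component.

    The byte order (translucency, red, green, blue) is an assumption
    made to match the example in the text.
    """
    return bytes([translucency, red, green, blue])


red = pack_colour(255, 0, 0)

hex_form = " ".join(f"{byte:02x}" for byte in red)
binary_form = " ".join(format(byte, "08b") for byte in red)
print(hex_form)
print(binary_form)

# Number of distinct colours with one byte for each of three intensities.
colour_count = 256**3
print(colour_count)
```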

7.4.8 Memory for processing versus memory for storage

Paul Murrell

This document is licensed under a Creative Commons Attribution-Noncommercial-Share Alike 3.0 License.


Source: https://statmath.wu.ac.at/courses/data-analysis/itdtHTML/node55.html
